As we have reached the midterm of the project, we are writing this post to summarize what we have been working on and what our next objectives are. Our overall evaluation of our work is positive, and we are excited to keep coding and learning.

Theory and discussion

On the one hand, we have held weekly discussions about the measures we had to implement, debating what information we could get from them and how feasible and practical they would be, both once integrated in CPAC and in terms of development. It has also taken hard work to research and learn the new techniques and tools used in the field, covering not only measures for the non-linear analysis of time series but also a wide variety of preprocessing techniques, optimization, and the Python language. Our weekly discussions about data and about integration with other imaging environments and other parts of CPAC (e.g. FSL) have been stimulating.

Coding

On the other hand, as a consequence of the previous point, we have accomplished the goal of implementing several measures, helper functions and tools, which partially make up a toolbox for the analysis of non-linear information in time series. The toolbox will need trimming and revision, guided by the principles of utility and complementarity. The organisation of these measures and their outputs is also still to come.

I have developed 4 working workflows (WF) for measures based on correlation and Information Theory between pairs of variables (Ivan has developed others that complement my work):

- Correlation WF

- Partial Correlation WF

- Mutual Information WF

- Transfer Entropy WF
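As a rough illustration of what the first two workflows compute, here is a minimal NumPy sketch of pairwise correlation and partial correlation between ROI time series. It is not the code of the CPAC workflows themselves; the function names and the `(n_rois, n_timepoints)` array layout are assumptions made for the example.

```python
import numpy as np

def correlation_matrix(ts):
    """Pearson correlation between all pairs of rows of `ts`.

    ts: array of shape (n_rois, n_timepoints); layout assumed for this sketch.
    """
    return np.corrcoef(ts)

def partial_correlation_matrix(ts):
    """Partial correlation of each pair of ROIs given all the other ROIs.

    Computed from the (pseudo-)inverse of the covariance matrix:
    pcorr_ij = -P_ij / sqrt(P_ii * P_jj), where P is the precision matrix.
    """
    precision = np.linalg.pinv(np.cov(ts))
    d = np.sqrt(np.diag(precision))
    pcorr = -precision / np.outer(d, d)
    np.fill_diagonal(pcorr, 1.0)
    return pcorr

# Toy data: x and y are related only through a common driver z.
rng = np.random.default_rng(0)
z = rng.standard_normal(500)
x = z + 0.5 * rng.standard_normal(500)
y = z + 0.5 * rng.standard_normal(500)
ts = np.vstack([x, y, z])

corr = correlation_matrix(ts)
pcorr = partial_correlation_matrix(ts)
```

In this toy case `corr[0, 1]` is high because both signals follow `z`, while `pcorr[0, 1]` stays close to zero once `z` is accounted for. This is the kind of complementarity between the two measures that we are after.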

Additionally, the toolbox has several measures and helper functions, some more complete than others, which we are polishing and comparing in order to select the ones to include in the toolbox:

- Shannon entropy

- ApEn and SampEn (approximate and sample entropy)

- Conditional entropies

- Entropy Correlation Coefficient

- Phase synchronization (as explained in my last entry)

- Phase Locking Values

- TE based on covariances for Gaussian variables (no need for discretization)

- Helper functions:

  - Voxel-wise timeseries generator from fMRI data

  - ROI timeseries generator from fMRI data

  - Transform (BIC discretization of signals based on equiquantization criteria and discretization formalisms by Ivan)

  - Frequency filters and bands

  - Phase extractors
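To make the link between the discretization helper and the entropy-based measures concrete, here is a minimal sketch of equiquantile binning followed by Shannon entropy and mutual information. The function names and the fixed number of bins are assumptions for the example; the toolbox's own Transform helper may work differently.

```python
import numpy as np

def equiquantize(x, n_bins):
    """Map a 1-D signal to integer symbols 0..n_bins-1 so that each
    bin holds roughly the same number of samples."""
    inner_quantiles = np.linspace(0, 1, n_bins + 1)[1:-1]
    edges = np.quantile(x, inner_quantiles)
    return np.searchsorted(edges, x, side="right")

def shannon_entropy(symbols):
    """Plug-in Shannon entropy (in bits) of a sequence of integer symbols."""
    _, counts = np.unique(symbols, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log2(p))

def mutual_information(sx, sy):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for two non-negative integer symbol sequences."""
    # Encode each (sx, sy) pair as a single integer to get the joint symbols.
    joint = sx * (sy.max() + 1) + sy
    return shannon_entropy(sx) + shannon_entropy(sy) - shannon_entropy(joint)

rng = np.random.default_rng(1)
a = rng.standard_normal(2000)
b = a + 0.5 * rng.standard_normal(2000)  # b depends on a

sa, sb = equiquantize(a, 4), equiquantize(b, 4)
mi_ab = mutual_information(sa, sb)
```

With equiquantile bins the marginal entropy of each signal is close to `log2(n_bins)` by construction, so differences between pairs of signals show up in the joint term only.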

There is also a Granger Causality (GC) module (implementing pairwise conditional GC and multivariate GC using a VAR model), which is still in its final testing and bug-fixing phase. It has the following functions, which can be found on GitHub:

- Granger Causality functions based on VAR model:

  - tsdata_to_autocov: Timeseries to autocovariance matrix calculation

  - autocov_to_var: Calculation of the coefficients and the residuals using the VAR model.

  - autocov_to_pwcgc: Calculation of the Pairwise Granger Causality using the residuals previously calculated.

  - autocov_to_mvgc: Calculation of the Multivariate Granger Causality using the residuals previously calculated.
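The idea behind these functions can be sketched in a few lines. Note that the sketch below does not follow the autocovariance route of the module: it fits the restricted and full autoregressive models directly by ordinary least squares, and all names in it are hypothetical. The quantity it returns is the usual log ratio of residual variances for a single pair of signals.

```python
import numpy as np

def lagged(series, order):
    """Stack `order` past values of each series as regressors.

    series: (n_vars, n_timepoints) -> (n_timepoints - order, n_vars * order)
    """
    n = series.shape[1]
    cols = [series[:, order - k : n - k].T for k in range(1, order + 1)]
    return np.hstack(cols)

def residual_variance(target, regressors):
    """Variance of the OLS residuals of `target` on `regressors` plus an intercept."""
    X = np.column_stack([np.ones(len(target)), regressors])
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return np.var(target - X @ beta)

def pairwise_gc(x, y, order=1):
    """Granger causality from x to y: ln(var_restricted / var_full)."""
    target = y[order:]
    restricted = residual_variance(target, lagged(y[None, :], order))
    full = residual_variance(target, lagged(np.vstack([y, x]), order))
    return np.log(restricted / full)

# Toy system in which x drives y, but not the other way round.
rng = np.random.default_rng(0)
n = 2000
x, y = np.zeros(n), np.zeros(n)
for t in range(1, n):
    x[t] = 0.6 * x[t - 1] + rng.standard_normal()
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + rng.standard_normal()

gc_x_to_y = pairwise_gc(x, y)
gc_y_to_x = pairwise_gc(y, x)
```

In this toy system `gc_x_to_y` is clearly positive and `gc_y_to_x` stays near zero. The conditional and multivariate versions extend the same comparison by adding the past of the remaining variables to both models.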

Testing on real data

Finally, we have applied the developed measures to real fMRI data and reported some preliminary results. These results can be found in previous entries of this blog:

Correlation, Entropies and Mutual Information, Transfer Entropy and Phase Synchrony.

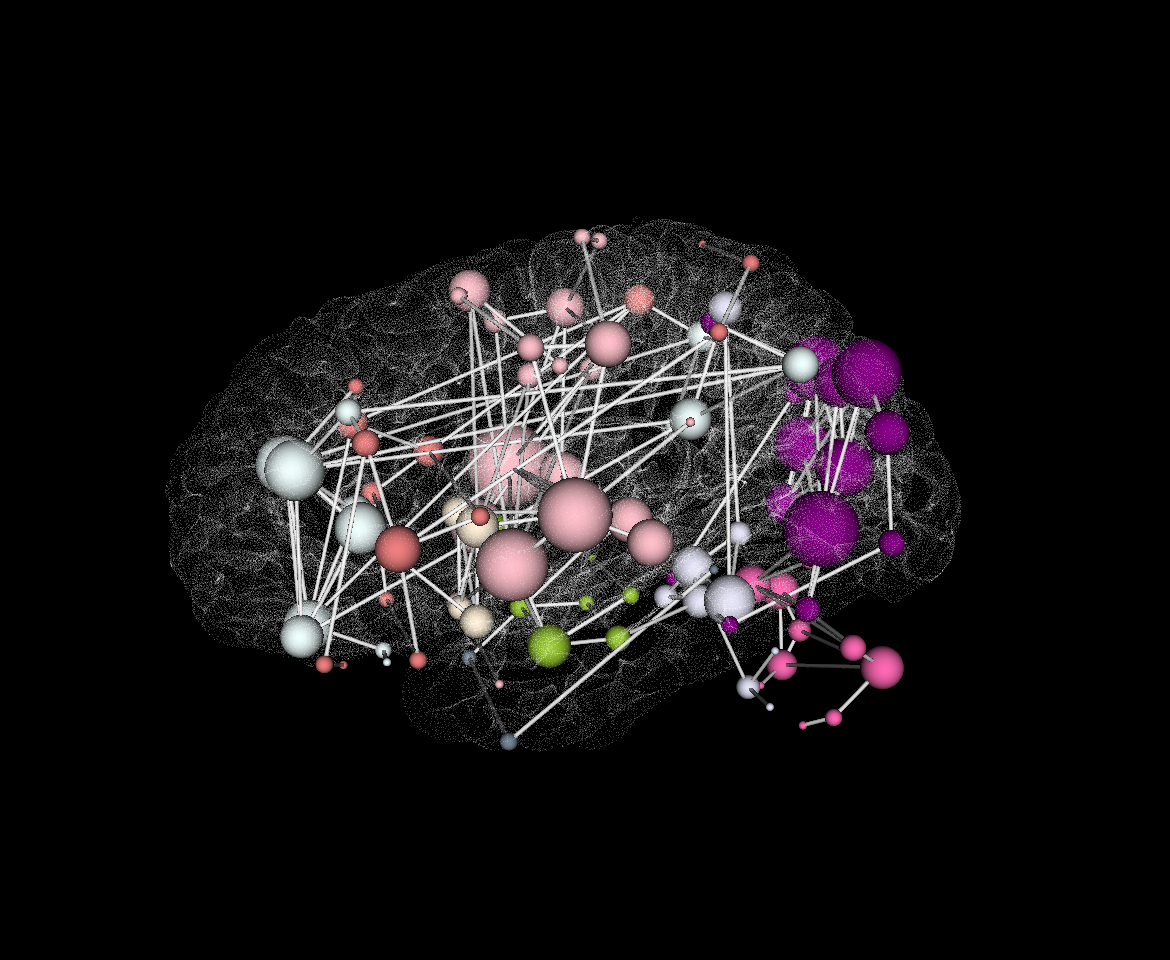

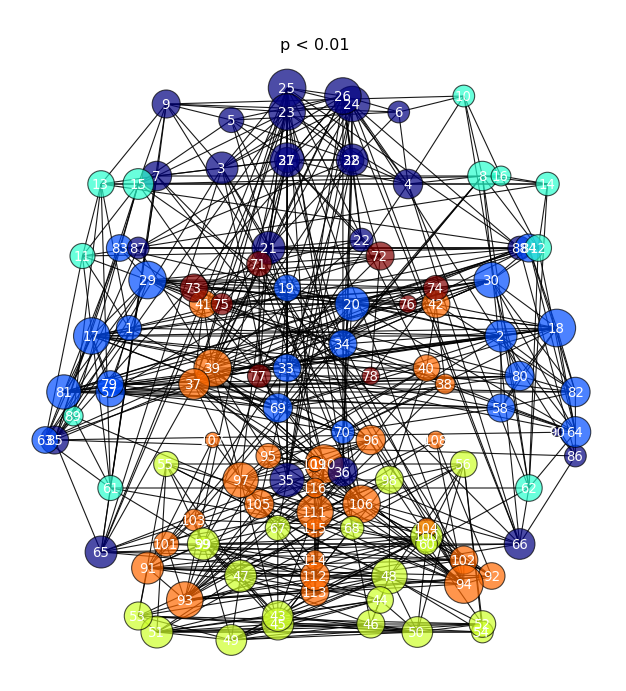

We are about to start using the NCoRR database extensively to run the measures on real data, and to adopt new atlases that extend the 90 ROIs we have been working with to 512 and/or 1024 regions, as well as voxel-wise analysis, for modelling on our cluster. We are also working on the graphical representation of results for the documentation. A basic idea of how we could represent our matrices using networkx is explained here as a guide: it takes correlation matrices (it could be the bi-directional results matrix of any other measure) and generates figures like those included in this post.
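A minimal sketch of that idea follows, assuming a symmetric ROI-by-ROI matrix and an arbitrary threshold chosen only for the example. The helper name `graph_from_matrix` is hypothetical.

```python
import numpy as np
import networkx as nx

def graph_from_matrix(matrix, threshold):
    """Build an undirected weighted graph from a symmetric measure matrix.

    Nodes are ROI indices; an edge is added when the absolute value of the
    measure between two ROIs reaches `threshold` (an assumption of this sketch).
    """
    matrix = np.asarray(matrix)
    n = matrix.shape[0]
    graph = nx.Graph()
    graph.add_nodes_from(range(n))
    for i in range(n):
        for j in range(i + 1, n):
            if abs(matrix[i, j]) >= threshold:
                graph.add_edge(i, j, weight=float(matrix[i, j]))
    return graph

# Toy 3-ROI correlation matrix.
corr = np.array([[1.0, 0.8, 0.1],
                 [0.8, 1.0, -0.6],
                 [0.1, -0.6, 1.0]])
graph = graph_from_matrix(corr, threshold=0.5)
```

With matplotlib installed, `nx.draw_networkx(graph)` would render the result. For directed measures such as Transfer Entropy or Granger Causality, an `nx.DiGraph` filled from the full (non-symmetric) matrix would be the natural counterpart.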

Next objectives

Even though we are successfully completing our initial plan, we still have work to do:

The obvious point is testing these tools on real data and modelling them and their parameters for robustness and effective documentation, while also comparing several situations and testing the efficiency and stability of the code.

Beyond that, I would like to point out some to-dos and objectives for the second (and last) part of the project:

- Merge both students' repositories and keep a copy on a cluster, so that studies can be run on it.

- Solve code problems and align our development strategies with the existing CPAC code (efficiency and some errors in measures).

- Work on the integration with CPAC (GUI building and dependencies).

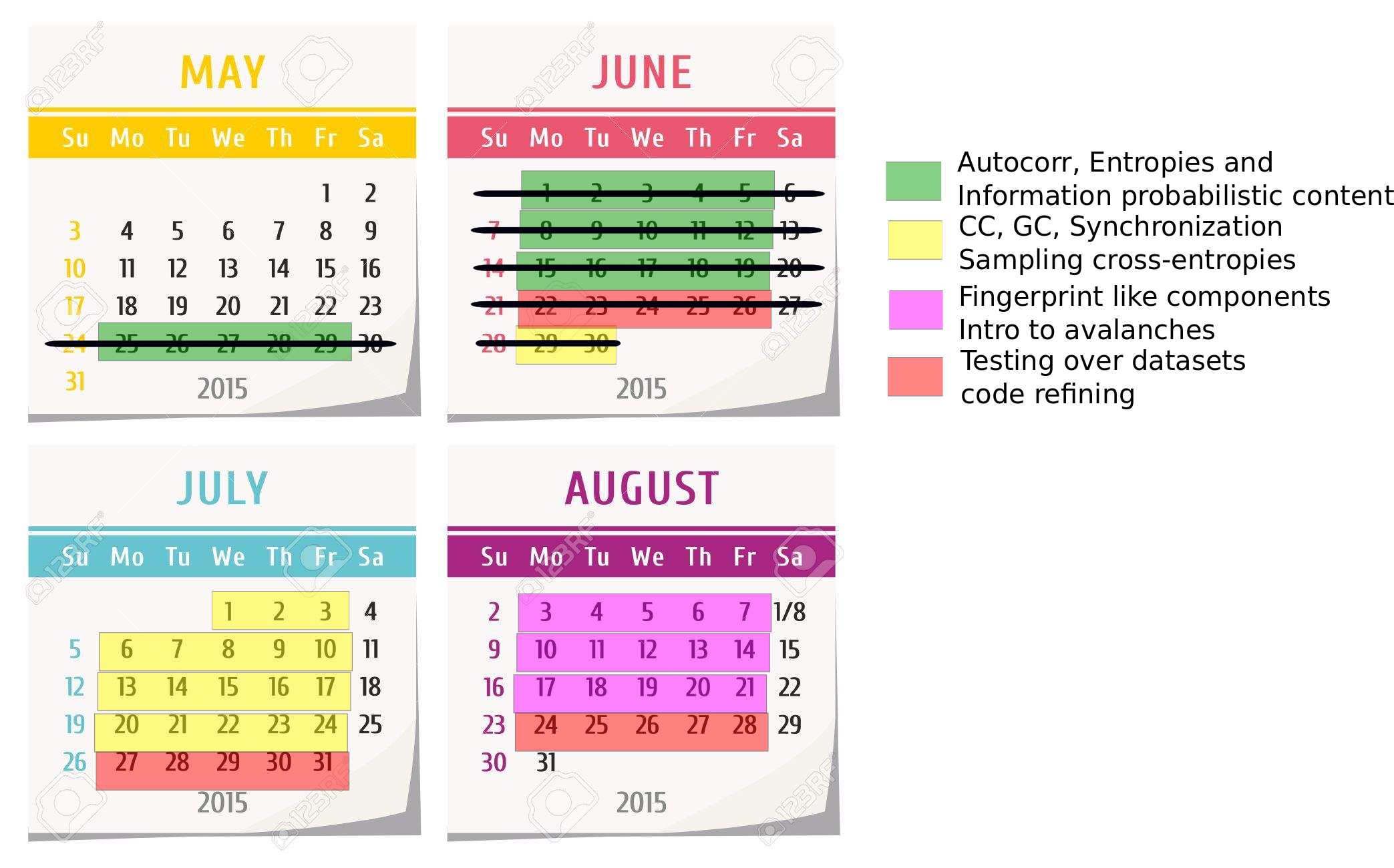

- Keep following the initial plan: Cross-entropies, Fingerprints and Avalanche-point process modelling. Discuss extensively the features to add, the most relevant measures and future applications to real data.

- Implement measures of multiscale dynamics.

- We now have a primitive and simple way of calculating the number of bins via equiquantization. We are discussing more advanced methods, and we will likely develop an alternative.

- Related to frequencies (to be developed using what we already have as a base):

  - Implement Sample Entropies.

  - Fingerprints -> Determine important frequencies. Use of the power spectrum.
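As a starting point for the last item, important frequencies can be read off a simple periodogram. The sketch below uses only NumPy. The function names, the repetition time `tr` and the number of peaks are assumptions for illustration, not part of the toolbox.

```python
import numpy as np

def power_spectrum(signal, tr):
    """Periodogram of a 1-D signal sampled every `tr` seconds.

    Returns the frequencies (Hz) and the power at each frequency.
    """
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the DC component
    freqs = np.fft.rfftfreq(signal.size, d=tr)
    power = np.abs(np.fft.rfft(signal)) ** 2 / signal.size
    return freqs, power

def dominant_frequencies(signal, tr, n_peaks=3):
    """Frequencies of the `n_peaks` largest periodogram values, strongest first."""
    freqs, power = power_spectrum(signal, tr)
    strongest = np.argsort(power)[::-1][:n_peaks]
    return freqs[strongest]

# Toy signal: a 0.05 Hz oscillation plus noise, sampled with TR = 2 s.
rng = np.random.default_rng(0)
tr = 2.0
t = np.arange(200) * tr
toy = np.sin(2 * np.pi * 0.05 * t) + 0.1 * rng.standard_normal(t.size)

top = dominant_frequencies(toy, tr, n_peaks=1)
```

A raw periodogram is noisy on real fMRI series, so an averaged estimate (for example Welch's method) would probably be a better base for the fingerprints. This version is only meant to show the principle.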