Wiener-Granger causality (“G-causality”) is a statistical notion of causality applicable to time series data, whereby cause precedes, and helps predict, effect. To analyse fMRI time series, we have developed, as a first approach, a set of Python scripts that calculate Multivariate Granger Causality (MVGC), based on the MVGC Toolbox of Barnett & Seth [1]. The most common operationalisation of G-causality, and the one on which the MVGC Toolbox is based, uses VAR (vector autoregression) modelling of the time series. It is not the only one, however, and we intend to explore alternatives that could be more robust.

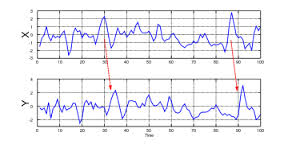

G-causality assumes two jointly distributed vector-valued stochastic processes (“variables”) X = X1, X2, … and Y = Y1, Y2, …. We say that X does not G-cause Y if and only if Y, conditional on its own past, is independent of the past of X; intuitively, past values of X yield no information about the current value of Y beyond that already contained in the past of Y itself. If, conversely, the past of X does convey information about the future of Y above and beyond all information contained in the past of Y, then we say that X G-causes Y, as illustrated in the image above.
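In the VAR setting, this definition is usually made quantitative by comparing two regressions of Y. As a sketch, with model order q and with coefficient and residual symbols (A, A', B, ε, ε') chosen here purely for illustration:

$$
\begin{aligned}
Y_t &= \sum_{k=1}^{q} A'_k Y_{t-k} + \varepsilon'_t && \text{(reduced: own past only)} \\
Y_t &= \sum_{k=1}^{q} A_k Y_{t-k} + \sum_{k=1}^{q} B_k X_{t-k} + \varepsilon_t && \text{(full: own past and past of } X\text{)} \\
F_{X \to Y} &= \ln \frac{\det \operatorname{cov}(\varepsilon'_t)}{\det \operatorname{cov}(\varepsilon_t)}
\end{aligned}
$$

F is zero when adding the past of X does not reduce the prediction error of Y, and positive otherwise.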

The steps to calculate MVGC are as follows. First, we compute the autocovariance sequence from the time series. Next, we extract the VAR model coefficients from it. Finally, we calculate the MVGC itself. More detailed explanations and code comments can be found on GitHub and in the paper itself. In the following example, X is the time series matrix and q is the order of the model (number of lags).
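As a rough illustration of the first step, the sketch below estimates the autocovariance sequence up to lag q. It is not the code from the repository: the function name `autocov_sketch`, the layout of X (variables in rows, observations in columns) and the per-lag normalisation are all assumptions made for this example.

```python
import numpy as np


def autocov_sketch(X, q):
    """Sample autocovariance sequence of X up to lag q.

    Assumptions (hypothetical, for illustration only):
    - X has shape (n, m): n variables, m observations.
    - Returns G with shape (n, n, q + 1), where
      G[:, :, k] estimates Gamma_k = E[x_t x_{t-k}^T].
    """
    X = np.asarray(X, dtype=float)
    n, m = X.shape
    if q >= m - 1:
        raise ValueError("too many lags for the number of observations")

    # Remove the temporal mean of every variable
    X = X - X.mean(axis=1, keepdims=True)

    G = np.zeros((n, n, q + 1))
    for k in range(q + 1):
        # Pair each observation with the one k steps earlier
        G[:, :, k] = X[:, k:] @ X[:, : m - k].T / (m - k)
    return G
```

With this layout, `G[:, :, 0]` is the ordinary (biased) covariance matrix of the data, and each further slice adds one lag.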


To calculate the MVGC, the required inputs are G (the autocovariance sequence), x (the vector of indices of the target, or causee, multi-variable) and y (the vector of indices of the source, or causal, multi-variable). The calculation fits the VAR model twice: once to all variables (x, y, z), and once without the source (x, z), where z denotes the remaining variables, which are conditioned on. The output F is the Granger causality itself.
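The calculation described above can be sketched as follows. This is a minimal illustration rather than the repository code: `residual_cov` and `mvgc_sketch` are hypothetical names, G is assumed to have shape (n, n, q + 1) with `G[:, :, k]` holding the lag-k autocovariance, and the residual covariance is obtained by solving the Yule-Walker equations directly, which is a simple substitute for a recursive solver.

```python
import numpy as np


def residual_cov(G):
    """Residual covariance of the VAR(q) model implied by G (hypothetical helper).

    Assumes G[:, :, k] = Gamma_k = E[x_t x_{t-k}^T] for k = 0..q, with q >= 1.
    """
    q = G.shape[2] - 1
    if q < 1:
        raise ValueError("need at least one lag")

    def gamma(k):
        # Negative lags follow from Gamma_{-k} = Gamma_k^T
        return G[:, :, k] if k >= 0 else G[:, :, -k].T

    # Covariance of the stacked past [x_{t-1}; ...; x_{t-q}]: block (k, j) = Gamma_{j-k}
    B = np.block([[gamma(j - k) for j in range(1, q + 1)] for k in range(1, q + 1)])
    # Cross-covariance between the present x_t and the stacked past
    R = np.hstack([gamma(k) for k in range(1, q + 1)])
    # Variance left over after regressing the present on the past
    return gamma(0) - R @ np.linalg.solve(B, R.T)


def mvgc_sketch(G, x, y):
    """Granger causality from source y to target x, conditional on the rest."""
    x = np.atleast_1d(x)
    y = np.atleast_1d(y)
    n = G.shape[0]
    z = np.setdiff1d(np.arange(n), np.concatenate([x, y]))  # conditioning variables
    xz = np.concatenate([x, z])     # reduced model: source left out
    xzy = np.concatenate([xz, y])   # full model: all variables
    nx = len(x)

    sig_full = residual_cov(G[np.ix_(xzy, xzy)])
    sig_red = residual_cov(G[np.ix_(xz, xz)])

    # F = ln det(reduced residual cov of x) - ln det(full residual cov of x)
    _, logdet_full = np.linalg.slogdet(sig_full[:nx, :nx])
    _, logdet_red = np.linalg.slogdet(sig_red[:nx, :nx])
    return logdet_red - logdet_full
```

Because the target indices are placed first in both index vectors, the top-left `nx` by `nx` block of each residual covariance always belongs to x, which keeps the comparison between the two models straightforward.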


To calculate the coefficients:
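A minimal sketch of this step is given below, under stated assumptions. The name `var_from_autocov_sketch` is hypothetical, G is assumed to have shape (n, n, q + 1) with `G[:, :, k]` holding the lag-k autocovariance, and the Yule-Walker equations are solved in one shot with a dense linear solve. That is not necessarily the algorithm used in the repository or in the MVGC Toolbox; it is just the most compact way to show what the coefficients are.

```python
import numpy as np


def var_from_autocov_sketch(G):
    """VAR coefficients and residual covariance from an autocovariance sequence.

    Assumes the model x_t = sum_k A_k x_{t-k} + e_t and
    G[:, :, k] = Gamma_k = E[x_t x_{t-k}^T] for k = 0..q, with q >= 1.
    Returns A with shape (n, n, q), where A[:, :, k - 1] = A_k, and Sigma = cov(e_t).
    """
    n, _, q1 = G.shape
    q = q1 - 1
    if q < 1:
        raise ValueError("need at least one lag")

    def gamma(k):
        # Negative lags follow from Gamma_{-k} = Gamma_k^T
        return G[:, :, k] if k >= 0 else G[:, :, -k].T

    # Yule-Walker: [A_1 ... A_q] @ B = [Gamma_1 ... Gamma_q],
    # where block (k, j) of B is Gamma_{j-k}
    B = np.block([[gamma(j - k) for j in range(1, q + 1)] for k in range(1, q + 1)])
    R = np.hstack([gamma(k) for k in range(1, q + 1)])

    # Solve A_all @ B = R by transposing the system
    A_all = np.linalg.solve(B.T, R.T).T

    # Sigma = Gamma_0 - sum_k A_k Gamma_k^T
    Sigma = gamma(0) - A_all @ R.T

    # Unpack the n x (n*q) coefficient matrix into one n x n slice per lag
    A = A_all.reshape(n, q, n).transpose(0, 2, 1)
    return A, Sigma
```

The dense solve scales poorly with n and q, so for large models a recursive solver would be the more natural choice; the direct form is used here only because it maps line by line onto the equations.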


This is a preliminary version of the MVGC code. We now need to test and validate it on real data within the workflows and see whether it gives interesting results. We have also agreed that it would be useful to code Pairwise Conditional Granger Causality (which perhaps should have been the first step, before MVGC).