First of all, I want to announce that I have enabled comments on the blog, so readers can now comment on the entries. Let’s see if this new feature enriches the blog.

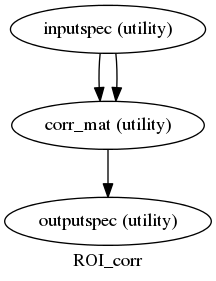

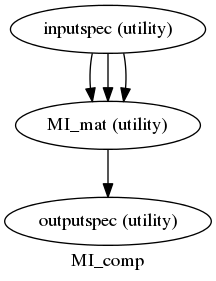

After some trouble getting them to work, we have the first two nipype workflows running. Both compute pairwise measures over a set of signals that the user selects with a mask:

- Pairwise Correlation over timeseries given a mask.

- Pairwise Mutual Information over timeseries given a mask.
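To make the pairwise setup concrete, here is a minimal NumPy sketch of the correlation case. It assumes the fMRI volume is already loaded as a 4D array (x, y, z, t), for example with nibabel's `get_fdata()`, and the mask as a 3D array with the same spatial shape. `masked_timeseries` and `pairwise_correlation` are hypothetical names, not the functions inside our workflows.

```python
import numpy as np


def masked_timeseries(data, mask):
    """Return an (n_voxels, n_timepoints) array with the signals inside `mask`.

    data: 4D array (x, y, z, t); mask: 3D array (x, y, z), nonzero = selected.
    """
    mask = np.asarray(mask, dtype=bool)
    if data.shape[:3] != mask.shape:
        raise ValueError("mask must match the spatial shape of the fMRI data")
    # Boolean indexing over the three spatial axes keeps the time axis intact.
    return data[mask]


def pairwise_correlation(data, mask):
    """Pearson correlation between every pair of masked timeseries."""
    ts = masked_timeseries(data, mask)
    # np.corrcoef treats each row (one voxel's signal) as one variable.
    return np.corrcoef(ts)


# Usage on synthetic data: 4 voxels selected, 50 timepoints each.
rng = np.random.default_rng(0)
data = rng.standard_normal((4, 4, 2, 50))
mask = np.zeros((4, 4, 2), dtype=bool)
mask[1:3, 1:3, 0] = True
print(pairwise_correlation(data, mask).shape)  # (4, 4)
```

Indexing a 4D array with a 3D boolean mask yields one row per selected voxel, which is exactly the layout `np.corrcoef` expects.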

Both workflows need an fMRI file and a mask, and the MI calculation additionally needs a variable, “bins”: the number of states into which the user wants the signals to be discretized.
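A pairwise mutual information calculation with a `bins` parameter can be sketched in the same style. This is an illustration under assumptions, not our workflow code: it uses the plug-in (histogram) estimator, lets `np.histogram2d` split each signal's range into `bins` equal-width states, and reports MI in bits. `ts` is assumed to be an (n_signals, n_timepoints) array, e.g. the voxel signals selected by the mask; the function names are hypothetical.

```python
import numpy as np


def mutual_information(x, y, bins):
    """Plug-in mutual information (bits) between two signals, each split into `bins` states."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()   # joint probability of (state_x, state_y)
    px = pxy.sum(axis=1)        # marginal distribution of x
    py = pxy.sum(axis=0)        # marginal distribution of y
    nz = pxy > 0                # empty cells contribute 0 (0 * log 0 := 0)
    ratio = pxy[nz] / np.outer(px, py)[nz]
    return float(np.sum(pxy[nz] * np.log2(ratio)))


def pairwise_mutual_information(ts, bins):
    """Symmetric (n_signals, n_signals) MI matrix for the rows of `ts`."""
    n = ts.shape[0]
    mi = np.zeros((n, n))
    for i in range(n):
        for j in range(i, n):
            mi[i, j] = mi[j, i] = mutual_information(ts[i], ts[j], bins)
    return mi


# A signal shares all of its (discretized) entropy with itself:
# 8 samples spread evenly over 4 states -> log2(4) = 2 bits.
print(mutual_information(np.arange(8), np.arange(8), bins=4))  # 2.0
```

The diagonal of the pairwise matrix is therefore the entropy of each discretized signal, which gives a natural upper bound for the off-diagonal values.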

This raises a new question: how many states should we discretize into? We are working on it and discussing whether to introduce a measure that optimizes the number of bins/states, among other entropy- or correlation-fitting formalisms. It seems we could implement some tests to choose a proper “number of states”; we will discuss this further.
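As one possible starting point for that debate, two classic histogram rules give a data-driven number of bins. These are standard rules of thumb swapped in for illustration, not the measure we have settled on: Sturges' rule depends only on the number of samples, while the Freedman-Diaconis rule uses the interquartile range and is less sensitive to outliers. NumPy also exposes both as `np.histogram_bin_edges(x, bins='sturges')` and `bins='fd'`. The helper names below are hypothetical.

```python
import math

import numpy as np


def sturges_bins(n_samples):
    """Sturges' rule: ceil(log2(n)) + 1 bins (derived assuming roughly normal data)."""
    return math.ceil(math.log2(n_samples)) + 1


def freedman_diaconis_bins(x):
    """Freedman-Diaconis rule: bin width 2 * IQR * n^(-1/3), robust to outliers."""
    x = np.asarray(x, dtype=float)
    q75, q25 = np.percentile(x, [75, 25])
    width = 2.0 * (q75 - q25) / len(x) ** (1.0 / 3.0)
    if width == 0:
        return 1  # zero IQR: fall back to a single state
    return max(1, math.ceil((x.max() - x.min()) / width))


print(sturges_bins(100))  # 8
rng = np.random.default_rng(0)
print(freedman_diaconis_bins(rng.standard_normal(200)))
```

For MI the choice matters beyond histogram aesthetics: with a fixed number of timepoints, the plug-in estimate is biased upward, and the bias grows with the number of bins.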

The discretization is done with a small helper function.
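As an illustration only, a helper of this kind could map each sample to an integer state using equal-width bins over the signal's own range. This is an assumed scheme (our helper may bin differently), and `discretize` is a hypothetical name.

```python
import numpy as np


def discretize(signal, bins):
    """Map a real-valued signal to integer states 0..bins-1 with equal-width bins."""
    if bins < 1:
        raise ValueError("bins must be a positive integer")
    signal = np.asarray(signal, dtype=float)
    lo, hi = signal.min(), signal.max()
    if bins == 1 or hi == lo:
        # A single state, or a constant signal that cannot be split.
        return np.zeros(signal.shape, dtype=int)
    # Keep only the interior edges, so np.digitize returns 0..bins-1 and the
    # maximum value falls in the last state instead of a state of its own.
    edges = np.linspace(lo, hi, bins + 1)[1:-1]
    return np.digitize(signal, edges)


print(discretize([0, 1, 2, 3, 4, 5], bins=3))  # [0 0 1 1 2 2]
```

Equal-width binning is simple, but a single outlier stretches the range and pushes most samples into a few states; equal-frequency (quantile) bins are a common alternative when that happens.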

We also ran into another problem while testing the workflows. When we ran the code on its own as plain scripts, we got the results we expected; but when we build and run the workflows, we cannot get those results. We need to investigate this, since it is the basis of much of what comes next.

To do:

- Solve the problems with workflows.

- Try real data over the workflows and see if we can get some interesting results.

- Implement further synchronization analysis measures.